Attempt #2

Parallel build and image versions of 🏃s

Building in parallel is a good way to finish a build faster. GitHub Actions runs the jobs of a workflow in parallel by default, and the needs key makes a job wait for the jobs it depends on. You can use it as follows:

    jobs:
      job1:
        ⋮
      job2:
        ⋮
      job3:
        needs: [job1, job2]
        ⋮

job3 will start when both job1 and job2 are finished. Since job1, job2, and job3 will run on different instances of the runner, job1 and job2 have to store the build results with actions/upload-artifact, and job3 should retrieve them with actions/download-artifact. That is a typical approach to running parallel jobs.
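The artifact hand-off described above can be sketched as follows. This is a minimal illustration, not the workflow from this article: the runner label, the echo commands that stand in for a build, the out directory, and the artifact names are all hypothetical. The v3 action versions are chosen to match those used later in this post; newer major versions may behave differently.

```yaml
jobs:
  job1:
    runs-on: ubuntu-latest
    steps:
      # Hypothetical stand-in for a real build command.
      - run: mkdir -p out && echo "built by job1" > out/result.txt
      - uses: actions/upload-artifact@v3
        with:
          name: job1-output
          path: out
  job2:
    runs-on: ubuntu-latest
    steps:
      - run: mkdir -p out && echo "built by job2" > out/result.txt
      - uses: actions/upload-artifact@v3
        with:
          name: job2-output
          path: out
  job3:
    needs: [job1, job2]
    runs-on: ubuntu-latest
    steps:
      # With no name given, v3 downloads every artifact of the run,
      # each into a directory named after the artifact.
      - uses: actions/download-artifact@v3
        with:
          path: artifacts
      - run: ls -R artifacts
```

Giving each artifact a distinct name keeps the outputs of job1 and job2 from overwriting each other when job3 downloads them.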

Parallel build of libraries for iOS

Here is a more practical example. The following workflow builds a library for iOS, packaged as an xcframework, that decrypts data encrypted with 128-bit AES in CBC mode:

    jobs:
      build_non_fat:
        runs-on: macos-latest
        timeout-minutes: 30
        strategy:
          max-parallel: 3
          matrix:
            abi: [x86_64, arm64]
            sdk: [iphoneos, iphonesimulator]
            exclude:
              - abi: x86_64
                sdk: iphoneos
        steps:
          ⋮
      install_and_test:
        runs-on: macos-latest
        timeout-minutes: 30
        needs: build_non_fat
        steps:
          ⋮

When all build_non_fat jobs finish, the install_and_test job starts. The following is a visualization of the workflow:

The first build_non_fat job executes three jobs†1 in parallel in the following configurations:

| ABI | SDK |
|---|---|
| x86_64 | iphonesimulator |
| arm64 | iphonesimulator |
| arm64 | iphoneos |

It is also possible to use CMake to create, in one step, a fat library for the iphonesimulator SDK that contains both the x86_64 and arm64 ABIs. In that case, we can reduce the number of jobs to two. However, since this project uses assembler instructions (intrinsics), the set of source files and the compilation options differ for each ABI. Therefore, the build_non_fat job has to build each non-fat library separately. Each runner creates a build directory containing the artifacts that CMake builds and saves it with actions/upload-artifact. The name of the build directory contains the ABI and SDK, so the directories will not conflict when the next job downloads them.

The second install_and_test job downloads all the artifacts of the build directories with actions/download-artifact and performs the following operations sequentially:

- Run the iOS simulator with xcrun and test the non-fat library for the iphonesimulator and x86_64†2 with ctest.
- Create the fat library for the iphonesimulator with lipo.
- Create the xcframework with xcodebuild.
- Install the xcframework, the non-fat library for iphoneos, the fat library for iphonesimulator, and the header files.

It is somewhat complicated, but there is no need to understand all the details. The build is driven by shell scripts and the CMake commands they execute. CMake runs not only in the first jobs but also in the second job†3, but it does not create a build directory in the second job. Instead, the second job downloads the build directories that the first jobs made and runs ctest and cmake --target install on them.

†1 The combination of x86_64 and iphoneos is excluded by exclude.

†2 Due to using macOS runners with Intel architecture.

†3 We install the libraries (built in the first jobs) in a temporary location with cmake --target install and then use lipo or xcodebuild for them.

The versions of the runner image

However, when I ran such a workflow, the build sometimes failed. The failures occurred when executing cmake or ctest in the second job. Why did the builds fail?

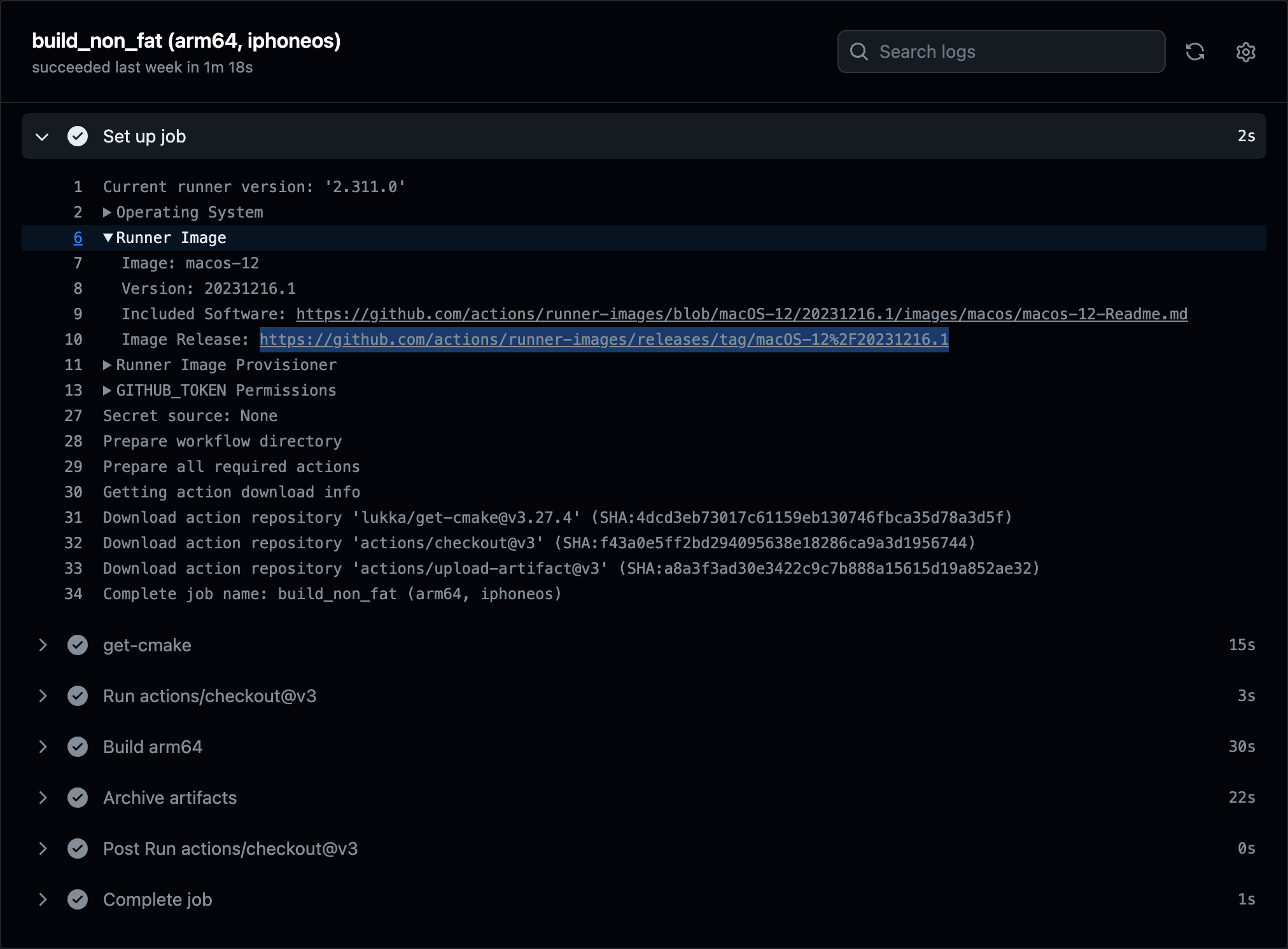

The first part of the build log in GitHub Actions keeps information about the runner. For example, if you expand the “Set up job” entry in any build log, the following appears:

That shows the runner image is macos-12, the version is 20231216.1, and

there are links about the image release. These links let us know what software

the maintainers installed and what changes they made in that runner. Image

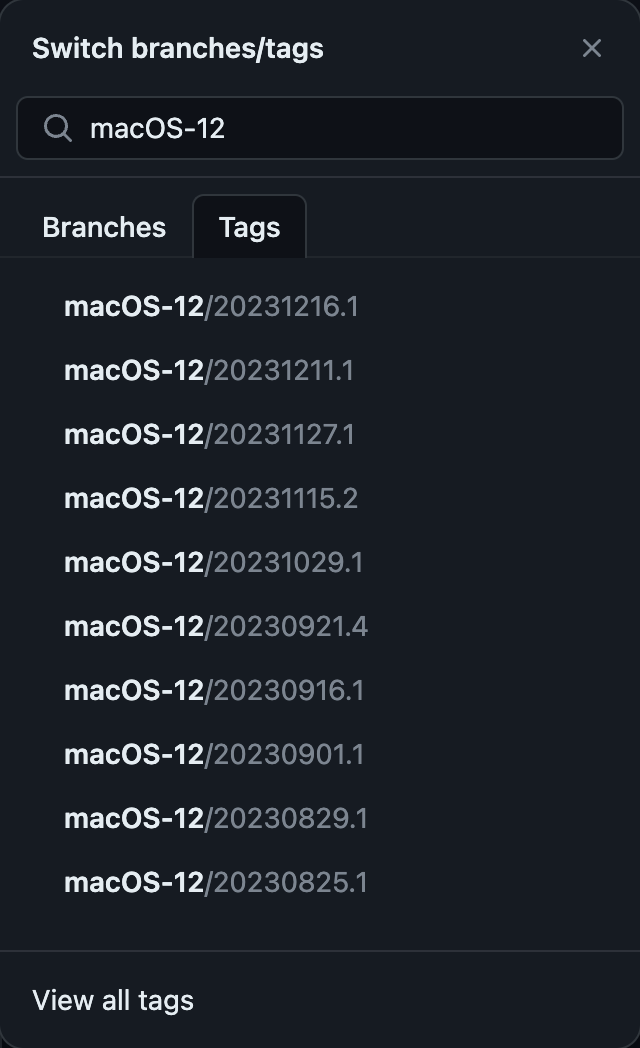

releases are tagged, so it is easy to see how often the image is updated as

follows:

It appears that there are updates about one to two weeks apart.

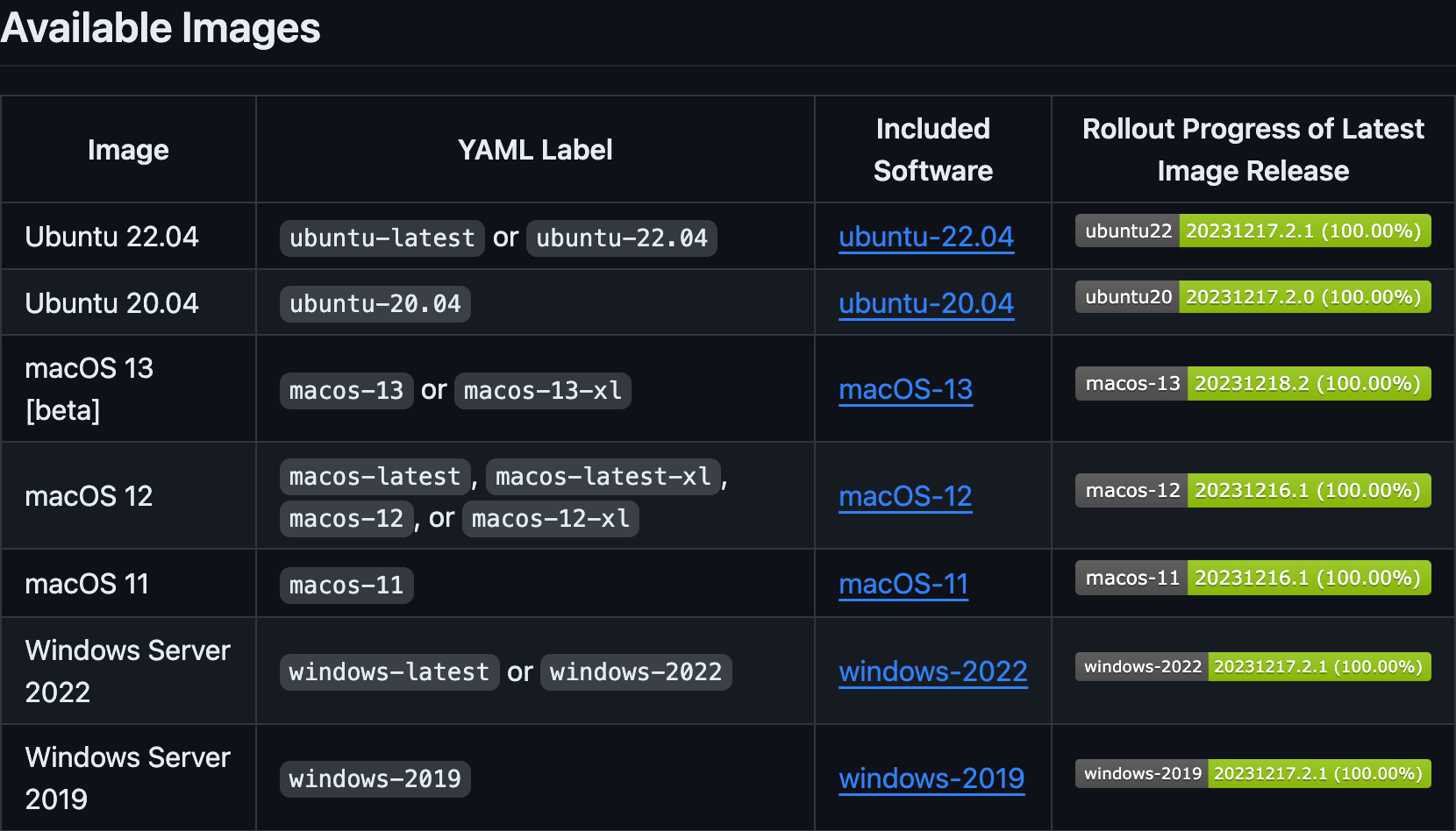

The README of that repository of runner images contains the following list of the latest available images:

There is a column titled Rollout Progress of Latest Image Release on the right side of the table. This column shows the percentage of instances that already use the latest version of the image. For example, if it is 100% for macos-12, all instances use the same (latest) version of that image. Since every row in the table above shows 100%, that was the case at that time.

However, it is not always 100%. The following table is from another day:

As shown in this image, for example, if the rollout progress is 9.79% for macos-12, there is a 90.21% chance that an instance will use the previous (older) version of the image. If the image version differs, the installed compiler, toolchain, SDK, and so on might also have a different version.

☕

What about the probability that multiple instances will all have the same version? For a runner image with 10% rollout progress and two instances, the probability is $(1 - 0.1)^2 + 0.1^2 = 0.82$, which is the sum of the probability that both run the old version and the probability that both run the new version. For four instances, the probability is $(1 - 0.1)^4 + 0.1^4 = 0.6561 + 0.0001 = 0.6562$. That is, the probability that all four instances run the same image version is about 2/3.
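The same calculation can be written for any number of instances. The formula below is a sketch under two assumptions that the text does not state explicitly: each of the $n$ instances receives the new image independently with probability $p$ (the rollout progress), and only two image versions are in circulation.

```latex
\[
  P(\text{all } n \text{ instances run the same version}) = p^{n} + (1 - p)^{n}
\]
% p = 0.1, n = 2:  0.01   + 0.81   = 0.82
% p = 0.1, n = 4:  0.0001 + 0.6561 = 0.6562
```

Under these assumptions the probability is lowest at $p = 0.5$ and decreases as $n$ grows, so workflows with many parallel jobs are the most exposed in the middle of a rollout.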

That is why the build failed. One of the instances running in the build was from an old image, and (unfortunately) the version of CMake differed between that old image and the latest one. It is no wonder that testing or installing with the new CMake fails on a build directory created by the old CMake.

The biggest problem was my assumption that the image of each runner would be identical.

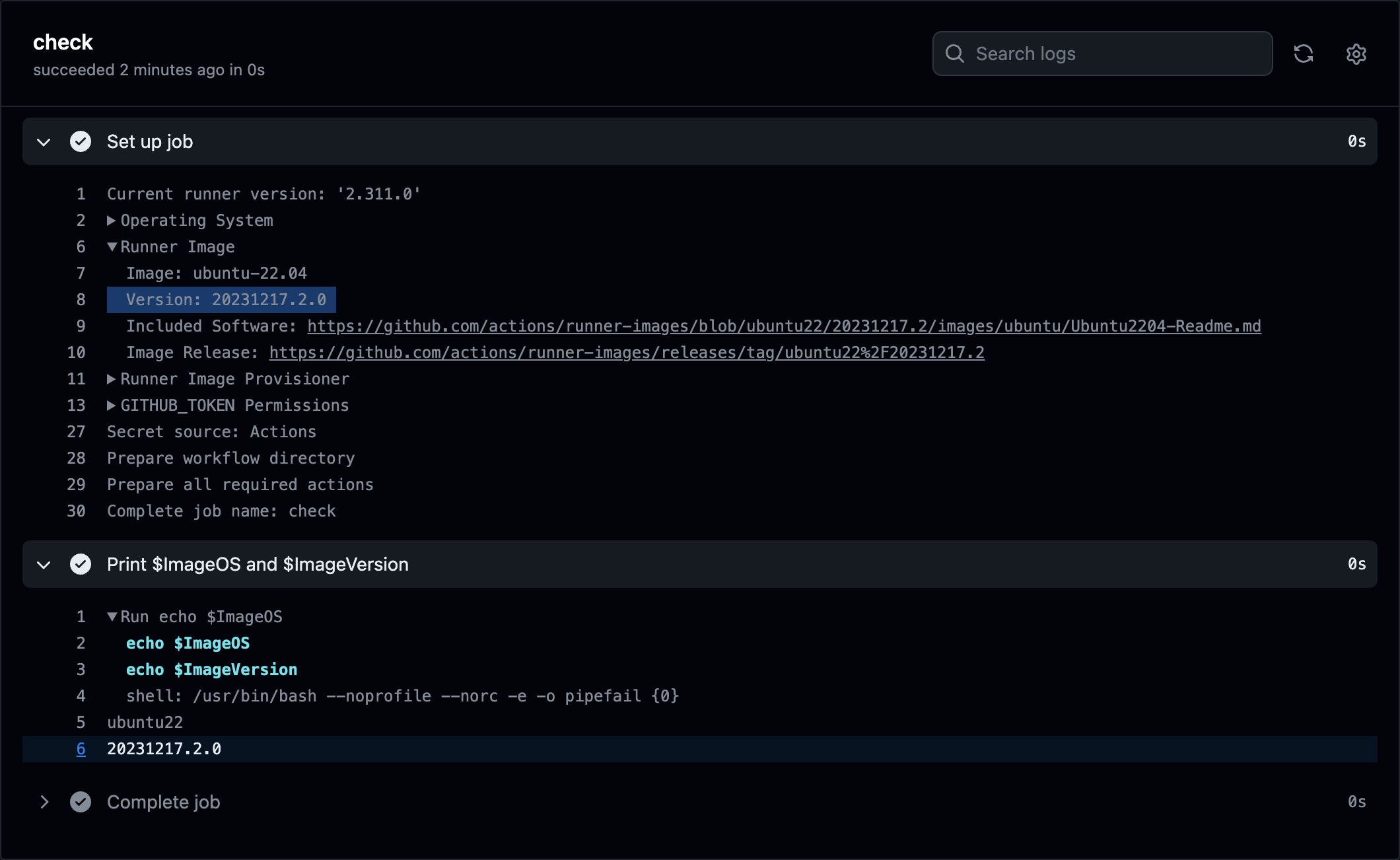

Getting the version at runtime

Now, how do I get the version of the image that is running the workflow? As far as I could find, this is not documented yet, but the version seems to be available in the ImageVersion environment variable as follows:
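A minimal sketch of such a step, assuming the ImageVersion environment variable is set on the GitHub-hosted runner (as noted above, this is observed behavior rather than something the documentation promises). The step name is hypothetical.

```yaml
- name: Show runner image version
  # Prints "unknown" if the variable is not set on this runner.
  run: echo "Runner image version is ${ImageVersion:-unknown}"
```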

But even if you get the image version, there is little you can do with it. Of course, it is possible to “store the versions of the running images in the parallel jobs, check them in the later job (the one that downloads the build directories, as described in the previous section), and stop execution if they are not all the same.” However, as we saw earlier, the image is updated so often that it is inconvenient for the CI to stop every time. In any case, what you should check in the previous example is the installed version of CMake, not the image version.
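The check described above, applied to the CMake version rather than the image version, might look like the following sketch. The step names, the cmake-version.txt file, and the artifact names are hypothetical. It assumes actions/upload-artifact@v3 and actions/download-artifact@v3, where a download without a name places each artifact in a directory named after it.

```yaml
# Steps to add to each build_non_fat matrix job:
- name: Record CMake version
  run: cmake --version | head -n 1 > cmake-version.txt
- name: Upload CMake version
  uses: actions/upload-artifact@v3
  with:
    name: cmake-version-${{ matrix.sdk }}-${{ matrix.abi }}
    path: cmake-version.txt

# Steps to add to the later job (install_and_test):
- name: Download all artifacts
  uses: actions/download-artifact@v3
  with:
    path: artifacts
- name: Stop if CMake versions differ
  run: |
    # Include the CMake version of this job in the comparison.
    mkdir -p artifacts/cmake-version-self
    cmake --version | head -n 1 > artifacts/cmake-version-self/cmake-version.txt
    # Count the distinct version lines; exactly one means all jobs agree.
    count=$(cat artifacts/cmake-version-*/cmake-version.txt | sort -u | wc -l | tr -d ' ')
    if [ "$count" -ne 1 ]; then
      echo "CMake versions differ between jobs:" >&2
      cat artifacts/cmake-version-*/cmake-version.txt | sort -u >&2
      exit 1
    fi
```

This only detects the mismatch and stops the run. It does not prevent it, which is why the following sections look at pinning the tool or keeping its use inside one job.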

Install the specified version of CMake

CMake is not a huge tool, and there are binary distributions for Windows, Linux, and macOS. Instead of using the preinstalled CMake in the image, how about installing your favorite version of CMake yourself?

There is a third-party action available on the marketplace to install CMake. In the first step of the workflow, try to install CMake by specifying the version as follows:
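The text does not say which action was used. As one possibility, the sketch below assumes the jwlawson/actions-setup-cmake action and its cmake-version input; both the choice of action and the version number are assumptions made for illustration, so check the README of whichever action you pick before relying on it.

```yaml
steps:
  - name: Install a fixed CMake version
    # Assumed action and input name; not necessarily the one used in this article.
    uses: jwlawson/actions-setup-cmake@v2
    with:
      cmake-version: '3.27.x'
  - name: Confirm the version in use
    run: cmake --version
```

Adding the same step with the same version to every job that runs cmake or ctest is what keeps the build directories compatible across instances.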

Now, even if different versions of the image are mixed, the error no longer occurs. But we depend on other tools besides CMake. What about Xcode, for example? Something as big as Xcode is not a tool you want to install yourself just to pin a version.

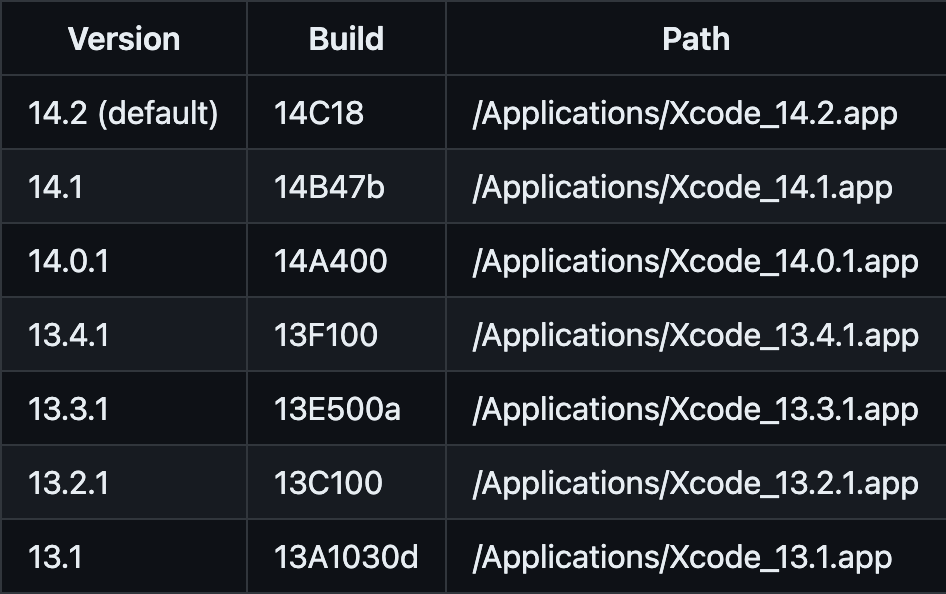

The Xcode versions installed on the current macos-12 image are as follows:

For example, if you want to specify the Xcode version by the value of the XCODE_VERSION environment variable, you can do the following:

    - name: Select Xcode
      run: sudo xcode-select -s "/Applications/Xcode_${XCODE_VERSION}.app/Contents/Developer"

An image update may remove the specified version of Xcode, but that seems to happen only about once a year (judging from the release of 13.1 in October 2021 and 14.2 in December 2022).
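Because a pinned Xcode can disappear from an image, it may help to fail early with a clear message. The following is a minimal sketch, assuming XCODE_VERSION is set at the workflow level. The job name is hypothetical, and the value 14.2 is only an example taken from the versions mentioned above.

```yaml
env:
  XCODE_VERSION: '14.2'

jobs:
  build:
    runs-on: macos-latest
    steps:
      - name: Select Xcode
        run: |
          developer_dir="/Applications/Xcode_${XCODE_VERSION}.app/Contents/Developer"
          # Fail with a readable message if this image no longer ships the pinned version.
          if [ ! -d "$developer_dir" ]; then
            echo "Xcode ${XCODE_VERSION} is not installed on this image" >&2
            ls -d /Applications/Xcode*.app >&2 || true
            exit 1
          fi
          sudo xcode-select -s "$developer_dir"
```

Listing the installed Xcode bundles in the error path shows which versions are available when the pin has to be updated.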

Finish the use of CMake in one job

Another idea to keep in mind is to avoid using a tool across instances. In our example above, this means that every use of CMake on a build directory should happen on the instance that created it. To do this, we no longer transfer the build directory created by CMake to the instance of the next job. Instead, the first job runs the tests, installs the non-fat library in a temporary directory, and uploads that directory, all on the same instance. The last job then downloads all the non-fat libraries and creates the xcframework. The workflow file would look like this:

    jobs:
      build_non_fat:
        runs-on: macos-latest
        timeout-minutes: 30
        strategy:
          max-parallel: 3
          matrix:
            abi: [x86_64, arm64]
            sdk: [iphoneos, iphonesimulator]
            exclude:
              - abi: x86_64
                sdk: iphoneos
        steps:
          ⋮
          - name: Run simulator and then test
            if: ${{ matrix.sdk == 'iphonesimulator' && matrix.abi == 'x86_64' }}
            run: |
              ⋮
              ctest --test-dir build/iphonesimulator-x86_64 -C $BUILD_TYPE
              ⋮
          - name: Install non fat files temporarily
            run: |
              cmake --build build/${{matrix.sdk}}-${{matrix.abi}} --target install \
                $HOME/tmp ...
          - name: Archive artifacts
            uses: actions/upload-artifact@v3
            with:
              name: non-fat-files-${{matrix.sdk}}-${{matrix.abi}}
              path: ~/tmp
              if-no-files-found: error
      install:
        runs-on: macos-latest
        timeout-minutes: 30
        needs: build_non_fat
        steps:
          ⋮
          - name: Download artifacts
            uses: actions/download-artifact@v3
            ⋮
          - name: Create xcframework
            ⋮

Of course, combining libraries built by an old compiler with libraries built by a new compiler can still lead to errors, but reducing the number of points where a tool is used across instances makes the workflow more tolerant of mixed image versions. If that is not possible, consider running the parallel builds on self-hosted runners.